Part 2: Timing is Everything — Shifting Computation and Relaxing Guarantees

“Performance isn’t always about doing less. Sometimes, it’s about doing it at the right time — or not doing it at all.”

“Performance isn’t always about doing less. Sometimes, it’s about doing it at the right time — or not doing it at all.”

“Fast systems aren’t always the ones that do less. Sometimes they’re the ones that do it later — or earlier.”

In performance-critical systems, it’s tempting to look for clever algorithms or advanced data structures. But one of the most reliable ways to gain speed isn’t to optimise what you do — it’s to control when you do it.

Networking systems have long embraced this principle. Under tight latency budgets, they routinely defer work, batch operations, trade accuracy for throughput, or fuse responsibilities to minimise overhead.

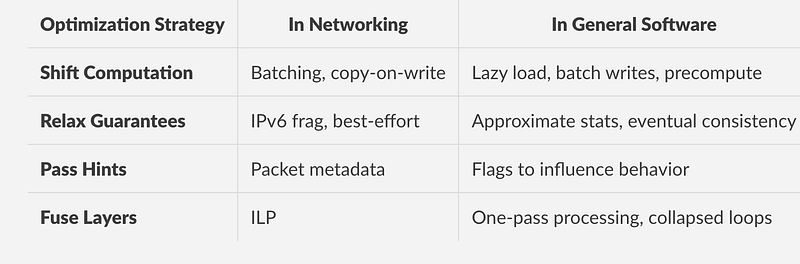

In this post, we explore four optimisation strategies that revolve around timing and specification. Though they originated in the world of protocol stacks and packet processing, these ideas apply just as well to backends, distributed systems, and even UI frameworks.

1. Shifting Work in Time — Laziness, Batching, and Pre-computation

At a high level, there are three ways to shift when computation happens:

- Do it earlier: Precompute or cache the result ahead of time.

- Do it later: Lazily defer computation until you absolutely need it.

- Do it together: Batch multiple tasks and process them as a group.

Networking systems apply all three:

- Copy-on-write delays memory duplication until one party tries to mutate data.

- Batching in packet schedulers or flow managers improves throughput by amortising cost.

- Checksum pre-computation avoids redundant work across layers.

The same ideas apply broadly:

- Lazily initialise resources only when accessed.

- Precompute expensive static data at startup.

- Batch writes or network calls to avoid repeated overhead.

public class AuditLogger {

public void log(String entry) {

try (FileWriter writer = new FileWriter("audit.log", true)) {

writer.write(entry + "\n");

} catch (IOException e) {

e.printStackTrace();

}

}

}The code above is clean but inefficient. Every call opens the file and writes immediately — introducing disk overhead on the critical path.

public class BatchingLogger {

private final List<String> buffer = new ArrayList<>();

private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();

public BatchingLogger() {

scheduler.scheduleAtFixedRate(this::flush, 0, 5, TimeUnit.SECONDS);

}

public void log(String entry) {

synchronized (buffer) {

buffer.add(entry);

}

}

private void flush() {

List<String> toFlush;

synchronized (buffer) {

if (buffer.isEmpty()) return;

toFlush = new ArrayList<>(buffer);

buffer.clear();

}

try (FileWriter writer = new FileWriter("audit.log", true)) {

for (String entry : toFlush) {

writer.write(entry + "\n");

}

} catch (IOException e) {

e.printStackTrace();

}

}

}You’ve now moved expensive work off the request path and amortised the cost over multiple log entries. This mirrors what routers do when they batch packets before processing them.

Relaxing Specifications — Trading Certainty for Speed

Networking systems often relax strong guarantees to meet throughput or latency constraints. For instance:

- Fair queuing ensures fairness but sacrifices simplicity and speed.

- IPv6 fragmentation offloads reassembly, simplifying the sender’s burden.

- Many “best-effort” protocols sacrifice delivery guarantees to keep the network flowing.

The lesson? In many software domains, strict correctness isn’t always required. Systems can be faster by allowing:

- Approximate results (percentile estimates, bloom filters, caches)

- Eventual consistency in distributed stores

- Bounded staleness in replicated data

If the specification allows for it, relaxing a constraint often means relaxing a bottleneck.

Suppose you want to calculate the 95th percentile latency. An exact computation involves sorting the entire dataset — expensive at scale.

Instead, you can maintain buckets and track their counts.

public class PercentileEstimator {

private final int[] buckets = new int[10]; // 0-9ms, 10-19ms, ...

public void record(int latencyMs) {

int bucket = Math.min(latencyMs / 10, buckets.length - 1);

buckets[bucket]++;

}

public int estimate95thPercentile() {

int total = Arrays.stream(buckets).sum();

int threshold = (int)(total * 0.95);

int cumulative = 0;

for (int i = 0; i < buckets.length; i++) {

cumulative += buckets[i];

if (cumulative >= threshold) {

return i * 10;

}

}

return 0;

}

}This approach is fast, online, and sufficient for dashboards. You’ve relaxed the spec (exact percentile) to gain performance and simplicity — just like many best-effort protocols.

3. Passing Hints — Better Communication Across Layers

In protocol stacks, it’s common to pass metadata alongside packets — things like ECN bits, prefetch flags, or routing hints. These “out-of-band” details help other layers optimise behavior without breaking abstraction boundaries.

In software systems, APIs often hide too much. By surfacing intent explicitly, you enable optimisation opportunities:

- Is this call part of a prefetch?

- Can this response be cached?

- Should this task be throttled?

Even small hints, expressed as parameters, flags, or metadata objects, can let downstream code make smarter decisions.

Networking protocols often pass small bits of metadata — like TTL, priority flags, or QoS levels — that allow downstream layers to optimise their behavior.

In software, most method calls are opaque. The callee doesn’t know why you’re calling it. A well-placed hint can prevent unnecessary work.

public class SearchService {

enum Mode { INTERACTIVE, PREFETCH }

public SearchResult search(String query, Mode mode) {

if (mode == Mode.INTERACTIVE) {

audit(query);

}

return runSearch(query);

}

private void audit(String query) {

System.out.println("AUDIT: " + query);

}

private SearchResult runSearch(String query) {

// Simulate search

return new SearchResult("Results for: " + query);

}

}With this design, callers can communicate intent (e.g., background prefetch), allowing the system to skip auditing, caching, or tracking. No performance tricks — just good interface design.

4. Fusing Layers — Avoiding Repetitive Work

In packet processing, every layer (IP, TCP, routing) might scan the same packet multiple times. Systems like Integrated Layer Processing (ILP) fuse these into a single pass — reducing cache misses and traversals.

The equivalent in general software is combining multiple passes into one:

- Validate, clean, and enrich data in one traversal

- Scan, group, and aggregate in a single loop

- Collapse filters and maps into fused transformations

This reduces cache misses, improves instruction locality, and avoids repeated overhead.

In the code below, each .stream() pass creates intermediate lists and overhead.

// Multiple passes: Validate → Clean → Enrich

public List<String> processMulti(List<String> rawLines) {

List<String> validated = rawLines.stream()

.filter(s -> s != null && !s.trim().isEmpty())

.collect(Collectors.toList());

List<String> cleaned = validated.stream()

.map(String::trim)

.collect(Collectors.toList());

return cleaned.stream()

.map(line -> "[Processed] " + line)

.collect(Collectors.toList());

}A better option is to collapse it into a single pass, you’ve cut the work by two-thirds, which adds up under load.

public List<String> processFused(List<String> rawLines) {

List<String> result = new ArrayList<>();

for (String line : rawLines) {

if (line == null || line.trim().isEmpty()) continue;

String cleaned = line.trim();

result.add("[Processed] " + cleaned);

}

return result;

}Summary: When You Do Work Matters as Much as What You Do

Coming Up Next:

In Part 3, we’ll look at how high-performance systems exploit the hardware, memory, and common-case behavior already available to them — and how those same strategies apply to everyday software design.

Stay tuned for Part 3: Smart by Design — Leveraging Components and Expected Use Cases