Part 1: Cut the Fat — Timeless Optimisation Lessons from Networking Systems

How networking teaches us to strip away waste, unnecessary abstractions, and misplaced generality in any high-performance system.

“Optimisation begins with not doing stupid things.”

When you think of blazing-fast systems — handling millions of requests per second with tight resource constraints — networking software probably isn’t the first place you look for inspiration. But it should be.

Networking systems operate in brutally constrained environments. Every nanosecond matters: at 10 Gbit/s, minimum-size Ethernet frames can arrive roughly every 67 ns. Every unnecessary byte copy spends CPU cycles and memory bandwidth that this budget cannot spare. Over the decades, systems engineers have developed battle-tested techniques that are powerful in networking and just as useful across the rest of the software stack.

In this four-part series, we’ll explore some of the most effective optimisation strategies rooted in networking and show how they apply to general software design, from backend services and databases to compilers and embedded systems.

Today’s focus: Cutting waste.

1. Avoiding Waste: Zero-Copy I/O

Networking Insight:

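In high-performance networking, copying data from buffer to buffer wastes CPU cycles and memory bandwidth and adds latency. Zero-copy I/O avoids this. Data moves from source to destination (e.g., from disk to socket) without being copied through intermediate user-space buffers. On Linux, for instance, sendfile() transfers file data to a socket entirely inside the kernel.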
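Python’s standard library exposes this idea through `socket.socket.sendfile()`. Where the platform provides `os.sendfile()`, the kernel moves file bytes to the socket without staging them in a Python-level buffer. Otherwise it falls back to ordinary `send()` calls. The following is a minimal sketch, and the helper name `send_file_zero_copy` is hypothetical.

```python
import socket


def send_file_zero_copy(sock: socket.socket, path: str) -> int:
    """Send the file at `path` over a connected stream socket.

    socket.sendfile() uses os.sendfile() when the platform supports it, so
    the kernel copies file data straight to the socket without staging it in
    a user-space buffer; otherwise it falls back to a send() loop.
    Returns the number of bytes sent.
    """
    with open(path, "rb") as f:  # sendfile() requires a binary-mode file
        return sock.sendfile(f)
```

The socket must be a blocking `SOCK_STREAM` socket. Non-blocking sockets raise `ValueError`.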

Broader Lesson:

Every time you pass data through layers, objects, or buffers that add no business value, you pay a tax. Ask yourself: can you move data without transforming it? Can you reuse memory? Can you avoid re-parsing?
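One way to answer "can you reuse memory?" in Python is to read into a single preallocated `bytearray`. Downstream code then receives `memoryview` slices of that buffer rather than a freshly allocated `bytes` object per chunk. This is a minimal sketch. The function name `for_each_chunk` and the 64 KiB default chunk size are hypothetical choices.

```python
from typing import BinaryIO, Callable


def for_each_chunk(stream: BinaryIO, handle: Callable[[memoryview], None],
                   chunk_size: int = 64 * 1024) -> int:
    """Feed `stream` to `handle` chunk by chunk, reusing one buffer.

    readinto() fills the preallocated bytearray in place, and slicing a
    memoryview shares that memory instead of copying it, so no new bytes
    object is created per chunk. `handle` must not keep the view after it
    returns, because the next read overwrites the buffer.
    Returns the total number of bytes read.
    """
    buf = bytearray(chunk_size)
    view = memoryview(buf)
    total = 0
    while True:
        n = stream.readinto(buf)
        if not n:  # 0 at EOF (None would mean "no data yet" on a non-blocking raw stream)
            break
        handle(view[:n])  # zero-copy slice of the reused buffer
        total += n
    return total
```

Anything that accepts the buffer protocol can serve as `handle`, for example a `hashlib` object’s `update` method.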

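```java
import java.nio.charset.StandardCharsets;
import java.util.Map;

// Bad: Decoding → mapping → copying → encoding, materialising the body at every step
String body = new String(request.getBody(), StandardCharsets.UTF_8); // explicit charset, not the platform default
Map<String, Object> parsed = parseJson(body);
String transformed = transform(parsed);
response.write(encode(transformed));

// Better: Stream directly from request to response, transforming as data flows through
// (request, response, parseJson, transform, encode and transformJsonStream are illustrative)
transformJsonStream(request.getInputStream(), response.getOutputStream());
```

2. Avoiding Unnecessary Generality: Fbufs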

Networking Insight:

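Fbufs (fast buffers) were designed to move I/O data across protection domains, such as the kernel and user processes, without the copying that general-purpose buffer-passing mechanisms require. The sequence of layers a packet traverses (its data path) is usually known in advance. Fbufs exploit this by allocating buffers for that path and sharing them between its layers as reference-counted buffers, instead of copying them at each boundary.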
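Real fbufs are an operating-system facility that also maps buffers across protection domains using virtual memory. This single-process Python sketch shows only the part that carries over to ordinary code. Buffers come from a pool dedicated to one data path, layers share them by reference, and the last release recycles the memory. `BufferPool` and `SharedBuffer` are hypothetical names.

```python
from __future__ import annotations


class BufferPool:
    """Hypothetical pool of fixed-size buffers reserved for one data path."""

    def __init__(self, count: int, size: int) -> None:
        self._free = [bytearray(size) for _ in range(count)]

    @property
    def free_count(self) -> int:
        return len(self._free)

    def acquire(self) -> SharedBuffer:
        # Reuse a cached buffer rather than allocating one per packet.
        # Raises IndexError if the pool is exhausted.
        return SharedBuffer(self, self._free.pop())

    def _recycle(self, raw: bytearray) -> None:
        self._free.append(raw)


class SharedBuffer:
    """One buffer shared by reference between layers instead of copied."""

    def __init__(self, pool: BufferPool, raw: bytearray) -> None:
        self._pool = pool
        self._raw = raw
        self._refs = 1

    def retain(self) -> SharedBuffer:
        # Another layer takes a reference to the same underlying bytes.
        self._refs += 1
        return self

    def release(self) -> None:
        # Drop one reference; the last release hands the memory back to the pool.
        if self._refs == 0:
            raise RuntimeError("buffer released too many times")
        self._refs -= 1
        if self._refs == 0:
            self._pool._recycle(self._raw)

    def view(self, start: int = 0, end: int | None = None) -> memoryview:
        # Zero-copy window onto the shared bytes (e.g. a header or payload).
        return memoryview(self._raw)[start:end]
```

In this design, a lower layer can write a header through `view()`. It then hands the same buffer upward with `retain()`, and no layer ever copies the payload.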

Broader Lesson:

The more generic your abstractions, the more overhead you often introduce. Flexibility comes at a cost. If you know your use case, don’t over-engineer for imaginary ones.

```cpp
#include <map>
#include <string>
#include <variant>

// Over-generalised event structure: every field access is a string-keyed map lookup
struct Event {
    std::string type;
    std::map<std::string, std::variant<int, float, std::string>> fields;
};

// Leaner, purpose-built event for a hot path: fixed layout, no heap allocation
struct PhysicsCollisionEvent {
    int objectId1;
    int objectId2;
    float impactForce;
};
```

Avoid designing a “universal event object” that supports every possible event type, only to end up parsing or copying fields that aren’t needed.

3. Don’t Confuse Specification with Implementation: Upcalls

Networking Insight:

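Protocols are specified as a stack of layers, but an implementation does not have to mirror that structure. Upcalls let a lower layer, such as a kernel module, call directly into logic in the layer above it. The layers stay decoupled without forcing every interaction through a strict top-down interface. The kernel stays lean, and user code can still influence its behaviour.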
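The following is a minimal sketch of the upcall pattern, assuming a toy two-layer stack. The upper layer registers a handler, and the lower layer calls it synchronously when data arrives. `Transport`, `Application` and the 2-byte header are hypothetical and do not correspond to any real kernel API.

```python
from __future__ import annotations

from typing import Callable


class Transport:
    """Hypothetical lower layer that hands received data upward via an upcall."""

    HEADER_LEN = 2  # hypothetical fixed-size framing header

    def __init__(self) -> None:
        self._upcall: Callable[[bytes], None] | None = None

    def register(self, handler: Callable[[bytes], None]) -> None:
        # The upper layer passes down a procedure for the lower layer to call.
        self._upcall = handler

    def on_receive(self, frame: bytes) -> None:
        # Lower-layer work (stripping the header), then a direct procedure
        # call into the upper layer: no intermediate queue, no polling.
        payload = frame[self.HEADER_LEN:]
        if self._upcall is not None:
            self._upcall(payload)


class Application:
    """Upper layer: sees only payloads, knows nothing about framing."""

    def __init__(self) -> None:
        self.messages: list[bytes] = []

    def deliver(self, payload: bytes) -> None:
        self.messages.append(payload)
```

`Transport` never names `Application`. It knows only the callback’s signature, which keeps the layers decoupled even though control flows upward.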

Broader Lesson:

Avoid entangling how something works with what it does. This separation improves clarity and flexibility, and it often makes refactoring and testing easier.

```python
# Tightly coupled: caching policy is embedded in the data-access function
def fetch_and_cache_user(user_id):
    user = db.query("SELECT * FROM users WHERE id = ?", user_id)
    redis.set(f"user:{user_id}", user)
    return user


# Better: keep caching policy separate from data access
# (db, redis and cache are illustrative placeholders, not a specific library)
def fetch_user(user_id):
    return db.query("SELECT * FROM users WHERE id = ?", user_id)


def get_user(user_id):
    return cache.get_or_fetch(f"user:{user_id}", lambda: fetch_user(user_id))
```

This lets you change the caching policy without touching the data-access logic, and vice versa.
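`cache.get_or_fetch` in the snippet above is not a specific library’s API. The following is one possible minimal implementation, assuming an in-process cache with a per-entry time-to-live. `TTLCache` and its parameters are hypothetical.

```python
from __future__ import annotations

import time
from typing import Any, Callable


class TTLCache:
    """Hypothetical in-memory cache: get_or_fetch with per-entry expiry."""

    def __init__(self, ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._entries: dict[str, tuple[float, Any]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: the fetch function is never called
        value = fetch()      # miss or expired: load from the source of truth
        self._entries[key] = (now + self._ttl, value)
        return value


cache = TTLCache(ttl_seconds=30.0)  # the `cache` object get_user() expects
```

Moving to Redis, a different expiry rule, or an LRU policy means replacing this class. `fetch_user` stays untouched.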

4. Efficient Routines: Optimise the Hot Path

Networking Insight:

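UDP’s receive path is much simpler than TCP’s, so systems streamline it aggressively. For example, the kernel does not scan every open socket to find the one an incoming datagram belongs to. It uses a hash-table lookup keyed on the destination port.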
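That lookup can be sketched in Python, assuming a single flat table keyed only by destination port. Real stacks also take the destination address into account. `UdpDemux` is a hypothetical name. The 8-byte header layout (source port, destination port, length, checksum) follows the UDP specification.

```python
from __future__ import annotations

import struct
from typing import Callable

# UDP header: source port, destination port, length, checksum,
# each a 16-bit unsigned integer in network (big-endian) byte order.
UDP_HEADER = struct.Struct("!HHHH")


class UdpDemux:
    """Hypothetical demultiplexer: destination port -> receive handler."""

    def __init__(self) -> None:
        self._by_port: dict[int, Callable[[bytes], None]] = {}

    def bind(self, port: int, handler: Callable[[bytes], None]) -> None:
        self._by_port[port] = handler

    def deliver(self, datagram: bytes) -> bool:
        _src, dst_port, length, _checksum = UDP_HEADER.unpack_from(datagram)
        # One hash lookup, regardless of how many ports are bound.
        handler = self._by_port.get(dst_port)
        if handler is None:
            return False  # no handler bound to this port: drop the datagram
        # The UDP length field counts the 8-byte header plus the payload.
        handler(datagram[UDP_HEADER.size:length])
        return True
```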

Broader Lesson:

Find the hot path and optimise it ruthlessly. You don’t need to optimise everything — just the right thing.
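Finding the hot path is a measurement problem before it is an optimisation problem. In Python, the standard-library `cProfile` and `pstats` modules report where time actually goes. The following is a minimal sketch. `profile_report` and the toy tokenizer workload are hypothetical.

```python
from __future__ import annotations

import cProfile
import io
import pstats


def profile_report(func, *args, top: int = 10) -> str:
    """Run func(*args) under cProfile; return the `top` entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.runcall(func, *args)
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(top)
    return out.getvalue()


# Hypothetical workload: a toy tokenizer whose classify() step runs per word.
def classify(word: str) -> str:
    if word.isdigit():
        return "NUMBER"
    if word == "+":
        return "PLUS"
    return "IDENTIFIER"


def tokenize(text: str) -> list[str]:
    return [classify(w) for w in text.split()]


if __name__ == "__main__":
    print(profile_report(tokenize, "x + 1 " * 10_000))
```

Only the functions that dominate this report are worth the kind of specialisation shown next.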

```python
# Naive: string comparisons on every token, inside the hot loop
if token.type == "IDENTIFIER": ...
elif token.type == "NUMBER": ...
elif token.type == "PLUS": ...

# Better: integer token types with direct integer indexing or a function dispatch table
# (TOKEN_* constants and handle_* functions are defined elsewhere)
handlers = {
    TOKEN_IDENTIFIER: handle_identifier,
    TOKEN_NUMBER: handle_number,
    TOKEN_PLUS: handle_plus,
}

handlers[token.type](token)  # one lookup per token instead of a chain of comparisons
```

This technique appears in interpreters, packet routers, and even React’s reconciler engine.
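The comment in the snippet above also mentions direct integer indexing. When token codes are small integers numbered from zero, the dispatch table can be a plain list, and each lookup is a single index operation with no hashing. The following is a self-contained sketch. `TokenType`, `Token` and the handler bodies are hypothetical.

```python
from __future__ import annotations

from enum import IntEnum
from typing import Callable, NamedTuple


class TokenType(IntEnum):
    # Small, contiguous codes starting at 0 so they can index a list directly.
    IDENTIFIER = 0
    NUMBER = 1
    PLUS = 2


class Token(NamedTuple):
    type: TokenType
    text: str


def handle_identifier(tok: Token) -> str:
    return f"name {tok.text}"


def handle_number(tok: Token) -> str:
    return f"int {int(tok.text)}"


def handle_plus(tok: Token) -> str:
    return "op +"


_BY_TYPE: dict[TokenType, Callable[[Token], str]] = {
    TokenType.IDENTIFIER: handle_identifier,
    TokenType.NUMBER: handle_number,
    TokenType.PLUS: handle_plus,
}
# Iterating an IntEnum yields members in definition order (0, 1, 2 here),
# so list position i holds the handler for token code i.
HANDLERS: list[Callable[[Token], str]] = [_BY_TYPE[t] for t in TokenType]


def dispatch(tok: Token) -> str:
    # One list index per token: no string comparisons, no hashing.
    return HANDLERS[tok.type](tok)
```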

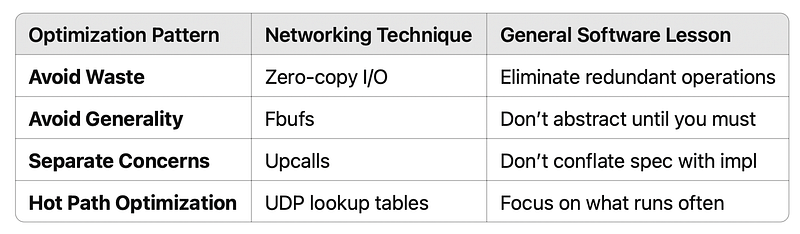

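Summary: What Networking Can Teach All of Us

Each technique in this part comes down to one habit. Don’t copy data you don’t need to (zero-copy I/O). Don’t build generality you won’t use (fbufs). Don’t let a layered specification dictate your implementation (upcalls). Spend your optimisation effort on the hot path (efficient routines).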

Coming Up Next:

In Part 2, we’ll look at how shifting computation in time (through pre-computation or lazy evaluation) and relaxing guarantees can unlock further performance gains. We’ll cover batching, copy-on-write, fair queuing, and more.

Stay tuned for Part 2: Timing is Everything — Shifting Computation and Relaxing Guarantees