Are You Ready to Manage a Software Organization Run by Software?

Let’s flip the script.

For decades, the software industry has been about managing people who write software. Now, for the first time, we’re approaching a strange new future:

Managing software that builds software.

If that makes you pause, it should.

Welcome to a world where your “developers” are autonomous AI agents, your “teams” are workflows between LLMs, and your “tech leads” are orchestrators making decisions from telemetry data rather than code reviews.

The question isn’t when this future arrives. It’s already creeping into our tooling.

The real question is:

Are you ready to manage a software organization that doesn’t consist of people?

The Rise of Autonomous Software Creators

We’ve already crossed several thresholds:

- LLMs like GPT-4 and Claude can scaffold full-stack apps.

- Agents can take feedback, fix bugs, and iterate on features.

- Observability stacks can suggest rollbacks or self-healing strategies.

What was once a developer’s job is now a capability exposed via prompt.

But here’s the twist:

You’re no longer managing developers.

You’re managing systems that behave like developers.

The New Stack

If you’re managing an AI-driven development system, your role shifts dramatically. You’re no longer asking:

“Why isn’t this ticket done?”

Instead, you’re asking:

“Why did the code agent hallucinate a deprecated API?”

“Why did the deployment agent push a fix without validating traffic stability?”

“Which model version generated this insecure logic?”

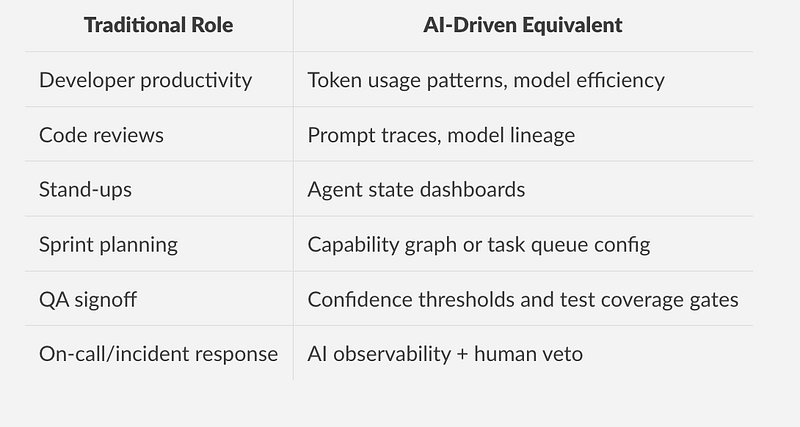

Here’s what the new management surface looks like:

Risks No One Is Talking About

Managing software that builds software isn’t just a tooling shift — it’s a governance challenge.

Loss of Intuition

- Developers debug with intuition and context.

- AI agents debug by optimizing statistical outputs.

Now ask yourself: Can you trust a team that never “knows” what it did wrong?

Opaque Decision Chains

- Why was a certain code path chosen?

- What prompt triggered a cascading change across services?

Tracing these decisions is like peeling back the layers of a probabilistic onion.
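
One way to make these chains traceable is to log every agent decision with the exact model version, a hash of the prompt it received, and a pointer to the decision that triggered it. The sketch below is a minimal illustration under that assumption. `DecisionRecord`, `DecisionLog`, the agent names, and model identifiers such as `model-a-2024-05` are hypothetical placeholders, not a real framework or real model names.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One agent decision with enough metadata to trace it later (hypothetical schema)."""
    decision_id: str
    agent: str                       # which agent acted, e.g. "code-agent"
    model_version: str               # exact model identifier that produced the output
    prompt_sha256: str               # hash of the full prompt, so the input can be matched later
    summary: str                     # human-readable description of the change
    parent_id: Optional[str] = None  # decision that triggered this one, if any


def prompt_hash(prompt: str) -> str:
    """Fingerprint a prompt so identical inputs produce identical hashes."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


class DecisionLog:
    """In-memory, append-only log of agent decisions (a stand-in for durable storage)."""

    def __init__(self) -> None:
        self._records: dict[str, DecisionRecord] = {}

    def record(self, rec: DecisionRecord) -> None:
        # Refuse orphaned decisions: every parent must already be logged.
        if rec.parent_id is not None and rec.parent_id not in self._records:
            raise KeyError(f"unknown parent decision: {rec.parent_id}")
        # Refuse overwrites so history cannot be silently rewritten.
        if rec.decision_id in self._records:
            raise ValueError(f"duplicate decision id: {rec.decision_id}")
        self._records[rec.decision_id] = rec

    def trace(self, decision_id: str) -> list[DecisionRecord]:
        """Follow parent links back to the root and return the chain, oldest first."""
        chain: list[DecisionRecord] = []
        current: Optional[str] = decision_id
        while current is not None:
            rec = self._records[current]
            chain.append(rec)
            current = rec.parent_id
        chain.reverse()
        return chain


# Usage: a cascading change that flows from planning through deployment.
log = DecisionLog()
log.record(DecisionRecord("d1", "planner-agent", "model-a-2024-05",
                          prompt_hash("Add rate limiting to the public API"),
                          "Planned a rate-limit change"))
log.record(DecisionRecord("d2", "code-agent", "model-b-2024-06",
                          prompt_hash("Implement plan d1"),
                          "Edited gateway middleware", parent_id="d1"))
log.record(DecisionRecord("d3", "deploy-agent", "model-b-2024-06",
                          prompt_hash("Ship change d2"),
                          "Deployed to production", parent_id="d2"))

for rec in log.trace("d3"):
    print(rec.agent, rec.model_version, rec.summary)
```

Because a record may only point at a parent that is already logged, and IDs cannot be reused, the chain can never loop and `trace` always ends at a root decision. A production version would need durable, tamper-evident storage; the dictionary here is only for illustration.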

Emergent Behavior

In AI orchestration systems, unexpected combinations of agents and prompts can result in behavior that no one designed.

The challenge becomes one of containment and constraint, not direction.
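
In practice, containment often takes the form of a default-deny gate between what an agent proposes and what actually runs. Such a gate would also catch the earlier case of a deployment agent pushing a fix without validating traffic stability. The sketch below is a minimal illustration only. The policy table, agent names, action names, check names, and the `authorize` function are all hypothetical, not part of any real orchestration tool.

```python
from dataclasses import dataclass, field

# Hypothetical policy: for each agent, the actions it may take and the checks
# that must have passed first. Anything not listed here is denied by default.
POLICY: dict[str, dict[str, frozenset[str]]] = {
    "code-agent": {"open_pull_request": frozenset({"unit_tests"})},
    "deploy-agent": {
        "deploy": frozenset({"canary_traffic_stable", "rollback_plan_ready"}),
    },
}


@dataclass
class ProposedAction:
    agent: str
    action: str
    checks_passed: set[str] = field(default_factory=set)


def authorize(proposal: ProposedAction) -> tuple[bool, str]:
    """Allow an action only if the policy lists it and every required check passed."""
    allowed_actions = POLICY.get(proposal.agent, {})
    if proposal.action not in allowed_actions:
        return False, f"{proposal.agent} is not permitted to {proposal.action}"
    missing = allowed_actions[proposal.action] - proposal.checks_passed
    if missing:
        return False, "missing checks: " + ", ".join(sorted(missing))
    return True, "allowed"


print(authorize(ProposedAction("deploy-agent", "deploy", {"rollback_plan_ready"})))
# -> (False, 'missing checks: canary_traffic_stable')
print(authorize(ProposedAction("code-agent", "deploy")))
# -> (False, 'code-agent is not permitted to deploy')
print(authorize(ProposedAction("deploy-agent", "deploy",
                               {"canary_traffic_stable", "rollback_plan_ready"})))
# -> (True, 'allowed')
```

The important design choice is default-deny. A new agent, or a new kind of action, does nothing until someone writes a rule for it. That is containment and constraint rather than direction.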

The Emerging Role: Managing AI

Managing AI-native software teams needs a new archetype:

- Part Product Owner: you define outcomes, not tasks

- Part Prompt Engineer: you shape capabilities through instructions

- Part SRE: you monitor, roll back, and debug failure states

- Part Ethicist: you design accountability into autonomous systems

You’re not hiring engineers — you’re curating a fleet of software bots and teaching them how to work together.

Five Questions We Must Ask Today

- Do we have version control over the models that build our systems?

- Can we trace and reproduce a design decision made by an agent?

- How do we test and validate AI-generated systems at scale?

- What happens when the AI is wrong, but no one knows how to fix it?

- Who is accountable when software builds software — and it fails?

Closing Thought: This Is Not a Thought Experiment

Today, AI tools are copilots.

Tomorrow, they’ll be co-workers.

The day after, they may be your entire team.

So as you build your next product roadmap, hire your next engineer, or invest in your dev tool stack, ask yourself:

Are you managing people who build software — or preparing to manage software that builds software?